On episode #15 we briefly discussed

“Big Data. Is it real or a buzzword?”

Zack and Bill tied Big Data’s definition to volume. Jordan also mentioned volume of data, and started to say something about technology. My position was (and remains), “I have no idea. I’ll deal with whatever my clients hand me.”

(Scroll to the bottom of this page to see the discussion.)

As Data Science and Big Data increase in sexiness, getting a definition for Big Data is something akin to asking questions of the Cheshire Cat in Alice in Wonderland, the response is likely to be riddles and more confusion.

Recently, Jenna Dutcher published an interesting blogpost compiling 43 responses to the question:

43 Definitions of Big Data

Jenna is community relations manager for datascience@berkeley, UC Berkeley School of Information’s online masters in data science.

The 43 responses came from a good mix of people; university professors, data scientists, CIOs, an economist, a science blogger … an overall good group of people who know their way around data. As I read through their definitions I noticed some recurring themes:

- A few were reluctant to answer

- Complexity

- Requiring new skill or methods

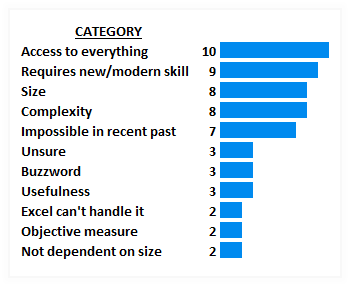

I decided to parse the responses into categories, allowing a definition to fit into multiple categories; e.g., if a definition included size and complexity, I added it to both categories.

QUICK-&-DIRTY SUMMARY OF THE BIG DATA DEFINITIONS

11 Categories

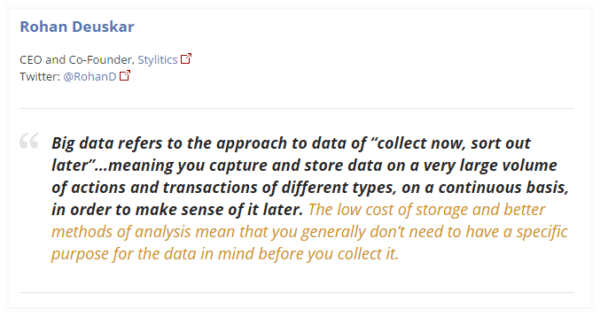

10 responses describe Big Data as a condition more than a thing. The Access to Everything category summarizes the “if you want it, come and get it” perspective. Like this quote from Rohan Deuskar:

MOST INTERESTING DEFINITIONS OF BIG DATA

Peter Skomoroch, formerly with LinkedIn:

… Many features and signals can only be observed by collecting massive amounts of data (for example, the relationships across an entire social network), and would not be detected using smaller samples …

This points to something very interesting about working with data: do you have enough to derive meaningful insights?

Peter suggests that Big Data is a situation where you need so much data that the minimum that you need begins to create problems. Therefore, Big Data isn’t just a massive frikken amount of details just because you can get them. No. It’s got to serve a purpose and be necessary.

Deirdre Mulligan Associate Professor at UC Berkeley School of Information

Big data: Endless possibilities or cradle-to-grave shackles, depending upon the political, ethical, and legal choices we make.

That stands by itself. No comments from me.

MY FAVORITE BIG DATA DEFINITION

Raymond Yee, PhD

Software Developer at unglue.it

Big data enchants us with the promise of new insights. Let’s not forget the knowledge hidden in the small data right before us.

Ah! The “shiny new thing” alert. So, it’s not so much a definition as it is a reminder to not lose perspective. Sure, we can access all manner of data, take our inquiries down curious alleys, look for unexpected correlations, and load excessive required fields on forms, but we have to ask:

- Do we really need to?

- Is it worth the costs of collection, cleansing, storing and analyzing?

And a big concern of mine:

- There’s a shortage of people who have the skill to collect, cleanse, store and analyze the data that’s already available to us. I cover this in a blogpost: Big Data Schmig Data.

Yup! There’s knowledge hidden in the small data … if we have the means to dig it out.

CLOSING THOUGHT ABOUT BIG DATA

Similar to dealing with the Cheshire Cat, we’ve got no clear answer to what Big Data is. We have more possibilities and more questions. However, for the moment, let’s agree that Big Data is a condition. Most of us won’t ever lay hands on it.

The real issue of Big Data is the information that’s available to those who do have the resources to access anything they want (ethically or unethically), manage the complexity, do the analysis, and use means that weren’t available yesterday. That impacts people who don’t sling data, and openly hate Excel. Privacy, identity theft, and those creepy ads that follow you from website to website … that’s the wrong side, the dark side of whatever we call Big Data: when a regular person starts wondering,”how the hell do they do that, and why can’t I make it stop?”

And that brings us back to Deirdre Mulligan’s definition: endless possibilities or cradle-to-grave shackles.

Cheshire Cat image courtesy of nightgrowler

- 01: Data Perspectives From An Unwitting Analyst - January 28, 2016

- Introduction – Excel In The Wild - December 6, 2015

- Oz’s Memories from PASS BAC 2015 - May 7, 2015

For me, Big Data is both a thing and a concept. The thing part of it is a technology. And for what it’s worth, that part is real: for example, Big Data refers to a set of technologies that allow us to data analysis on a much grander, and more significant scale. Azure, for instance, is a Big Data technology. PowerPivot is also an example. PowerPivot is useful here because there is a clear distinction between what PowerPivot can do and what normal Pivot Tables can do (although both work as an expression of OLAP… I think… maybe someone can verify this in their comments.).

The “concept” of Big Data however is mostly nonsense. You see it used interchangeably with Business Intelligence, Business Analytics, Statistical Analysis, Data Science, and the Internet of Things. Often the incorporation of novel dataset for analysis is considered Big Data. For instance, using twitter comments to predict the trajectory of a tornado. Or using health information to predict other outcomes (like, say, your ability to pay back a debt). But these ideas are only novel until they aren’t. Data is data. There’s no reason to think twitter data (or any other type of dataset) shouldn’t be used for this. At it’s core, it’s all just data analysis.

I also don’t know how I feel about Peter Skomoroch’s definition. A greater sample size reduces the chance of a Type II (false negative) error. So it can provide more confidence in the signal you’re testing for. But simply increasing the sample size to find a signal you don’t think exists in a smaller sample doesn’t seem like a good idea. The Law of Large Numbers tells the average of our observed values is more likely representative the more data we have in our sample. If a fainter signal comes about by increasing the sample size, but is mostly indiscernible in a smaller (but ultimately reasonable sized) sample, that faint signal might simply be noise. (In other words: it should be present in the smaller sample.) I’d be interested in what types of interactions are only seen on such massive scales of data. I’m willing to be wrong about this.

It’s worth pointing out here CERN has always used colossally sized datasets in its pursuit to prove the Higgs Boson. I don’t think anybody has ever referred to this work as Big Data, though it does fit the definition. But, again, their use of such large datsets as a lot to do with gaining confidence in their findings. I imagine it was because they found faint signals in their initial, smaller tests that encouraged them to push forward. Again, that finding would be consistent with what the Law of Large Numbers tells us about data analysis.